A 127-qubit quantum processor has been used by an international team of researchers to calculate the magnetic properties of a model 2D material. They found that their IBM quantum computer could perform a calculation that a conventional computer simply cannot, thereby showing that their processor offers quantum advantage over today’s systems – at least for this particular application. What is more, the result was achieved without the need for quantum error correction.

Quantum computers of the future could solve some complex problems that are beyond the capability of even the most powerful conventional computers – an achievement that is dubbed quantum advantage. Many physicists believe that such devices would need about a million quantum bits (or qubits) to gain this advantage. Today, however, the largest quantum processor contains fewer than 1000 qubits.

An important challenge in using quantum computers is that today’s qubits are very prone to errors, which can quickly destroy a quantum calculation. Quantum error correction (QEC) techniques deal with this noise and form the basis of an approach called fault-tolerant quantum computing. This involves using a large number of physical qubits to create one “logical qubit” that is much less prone to errors. As a result, a lot of hardware is needed to do calculations, and some experts believe that many years of development will be needed before the technique can be widely used.
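To see why spreading one logical qubit over many physical qubits helps, consider the simplest case: a repetition code that guards only against bit-flip errors. The sketch below assumes that each physical qubit flips independently with probability `p` and that a majority vote recovers the logical value; real QEC codes such as the surface code must also handle phase errors and are considerably more involved. The function name `logical_error_rate` is hypothetical.

```python
from math import comb


def logical_error_rate(n: int, p: float) -> float:
    """Probability that a majority vote over n copies gives the wrong answer.

    Simplified bit-flip model (an assumption, not a full QEC code): each of
    the n physical qubits flips independently with probability p, and the
    logical value is wrong only if more than half of them flip.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    failures_needed = n // 2 + 1
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(failures_needed, n + 1)
    )


if __name__ == "__main__":
    # Physical error rate of 1% per qubit, for increasing amounts of redundancy.
    for n in (1, 3, 5, 7):
        print(n, logical_error_rate(n, 0.01))
```

With p = 0.01, three copies already cut the failure probability to about 3 × 10⁻⁴, and each additional pair of copies suppresses it further. That trade of many physical qubits for one reliable logical qubit is why the hardware overhead grows so quickly.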

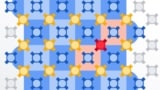

Now, however, a team led by researchers at IBM has shown that quantum advantage can be achieved without the need for QEC. The team used a 127-qubit quantum processor to calculate the magnetization of a material described by a 2D Ising model. This model represents the magnetic properties of a 2D material using a lattice of quantum spins that interact with their nearest neighbours. Despite its simplicity, the model is extremely difficult to solve.
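As a purely classical illustration of the quantities named above, the sketch below stores a small 2D lattice of spins, each +1 (up) or -1 (down), and computes the nearest-neighbour Ising energy and the magnetization (the average spin). It assumes a square lattice with open boundaries and a uniform coupling `J`. The experiment dealt with quantum spins laid out on the processor's own qubit connectivity, so this shows only the bookkeeping, not what makes the problem hard. The function names are hypothetical.

```python
def ising_energy(spins: list[list[int]], J: float = 1.0) -> float:
    """Nearest-neighbour Ising energy E = -J * sum over bonds of s_i * s_j.

    Assumes a square lattice with open (non-periodic) boundaries; each
    horizontal and vertical bond is counted exactly once.
    """
    rows, cols = len(spins), len(spins[0])
    energy = 0.0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:  # bond to the right-hand neighbour
                energy -= J * spins[r][c] * spins[r][c + 1]
            if r + 1 < rows:  # bond to the neighbour below
                energy -= J * spins[r][c] * spins[r + 1][c]
    return energy


def magnetization(spins: list[list[int]]) -> float:
    """Average spin per site, between -1 (all down) and +1 (all up)."""
    total = sum(sum(row) for row in spins)
    return total / (len(spins) * len(spins[0]))


all_up = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
checkerboard = [[1, -1, 1], [-1, 1, -1], [1, -1, 1]]
print(ising_energy(all_up), magnetization(all_up))              # -12.0 1.0
print(ising_energy(checkerboard), magnetization(checkerboard))  # 12.0 0.1111111111111111
```

With J > 0, aligned neighbours lower the energy, so the all-up lattice is a ground state and the checkerboard, in which every bond is antiparallel, has the highest possible energy.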

Noise cancellation

The researchers used an approach called “noisy intermediate-scale quantum” (NISQ) computation, which has already been used to do some chemistry calculations. This is a race against time, in which the calculation must be completed before errors build up. Instead of using the processor as a universal quantum computer, the researchers encoded the Ising model directly onto the qubits themselves. This takes advantage of the similar quantum-mechanical nature of the qubits and the model being simulated, and it led to a meaningful outcome without the use of QEC.
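Encoding the model directly onto the qubits means that each spin is represented by one qubit, so a quantity such as the magnetization can be read out by measuring the qubits many times. The sketch below assumes the common convention that a qubit measured as 0 corresponds to spin up (Z = +1) and 1 to spin down (Z = -1), and that character i of each bitstring belongs to qubit i; real toolkits differ on bit ordering. The function name is hypothetical, and no error mitigation is applied.

```python
def magnetization_from_shots(bitstrings: list[str]) -> float:
    """Estimate the average magnetization <Z> from measured bitstrings.

    Assumed convention: '0' -> spin up (Z = +1), '1' -> spin down (Z = -1),
    and character i of every bitstring is the outcome for qubit i.
    """
    n_qubits = len(bitstrings[0])
    per_qubit = [0.0] * n_qubits
    for bits in bitstrings:
        for i, b in enumerate(bits):
            per_qubit[i] += 1.0 if b == "0" else -1.0
    shots = len(bitstrings)
    per_qubit = [total / shots for total in per_qubit]  # <Z_i> for each qubit
    # Average the single-qubit expectation values over all sites.
    return sum(per_qubit) / n_qubits


shots = ["00", "01", "11", "00"]
print(magnetization_from_shots(shots))  # 0.25
```

With a finite number of shots this is only a statistical estimate, and on noisy hardware it is also biased by errors, which is the bias that error mitigation aims to remove.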

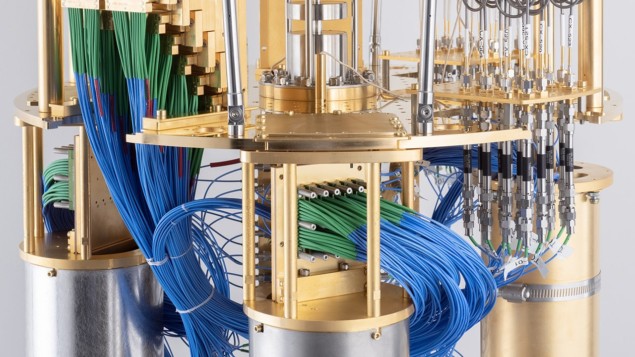

To do the calculation, the IBM team used a superconducting quantum processor chip comprising 127 qubits. The chip runs quantum circuits 60 layers deep, with a total of around 2800 two-qubit gates, which are the quantum analogue of conventional logic gates. The circuits generate large, highly entangled quantum states that encode the 2D Ising model; this is done by performing a sequence of operations on individual qubits and on pairs of qubits. High-quality measurements were possible thanks to the long coherence times of the qubits and because the two-qubit gates were all calibrated for optimal simultaneous operation.
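Running many two-qubit gates at the same time requires grouping them into layers in which no qubit takes part in more than one gate. The sketch below assumes a Trotter-style circuit in which each time step applies a single-qubit rotation to every qubit, followed by one two-qubit interaction gate per lattice bond, and it packs the bonds into layers with a simple greedy colouring. The gate labels (`rx`, `rzz`), the five-qubit chain and the helper names are illustrative assumptions, not IBM's actual circuit or compiler.

```python
def schedule_layers(edges: list[tuple[int, int]]) -> list[list[tuple[int, int]]]:
    """Greedily pack two-qubit gates into layers with no shared qubits."""
    layers: list[list[tuple[int, int]]] = []
    busy: list[set[int]] = []  # qubits already used in each layer
    for a, b in edges:
        for layer, used in zip(layers, busy):
            if a not in used and b not in used:
                layer.append((a, b))
                used.update((a, b))
                break
        else:  # no existing layer has room: open a new one
            layers.append([(a, b)])
            busy.append({a, b})
    return layers


def build_circuit(n_qubits: int, edges: list[tuple[int, int]], steps: int) -> list[list[tuple]]:
    """Return the circuit as a list of layers; each layer is a list of gate tuples."""
    bond_layers = schedule_layers(edges)
    circuit = []
    for _ in range(steps):
        circuit.append([("rx", q) for q in range(n_qubits)])  # single-qubit layer
        for layer in bond_layers:
            circuit.append([("rzz", a, b) for a, b in layer])  # simultaneous two-qubit gates
    return circuit


chain = [(0, 1), (1, 2), (2, 3), (3, 4)]
circuit = build_circuit(5, chain, steps=3)
two_qubit_layers = [layer for layer in circuit if layer[0][0] == "rzz"]
print(len(two_qubit_layers), sum(len(layer) for layer in two_qubit_layers))  # 6 12
```

The total number of two-qubit gates is the number of time steps multiplied by the number of bonds, while the two-qubit depth is the number of time steps multiplied by the number of layers. For a lattice whose bonds split into a fixed number of layers, depth therefore grows with the number of time steps rather than with the number of qubits.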

Mitigation, not correction

These measures remove a large part of the noise, but errors remained an important issue. To tackle this, the IBM team applied a quantum error mitigation process on a conventional computer. This is a post-processing technique that uses software to compensate for noise, allowing the magnetization to be calculated correctly.
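The article does not spell out the mitigation algorithm. One widely used family of techniques, zero-noise extrapolation, measures the same expectation value at several deliberately amplified noise levels and extrapolates back to zero noise. The sketch below assumes noise scale factors `lambdas` (1 being the hardware's native noise) and evaluates the exact polynomial through the measured points at zero noise (Richardson extrapolation). The data are made up for illustration and are not from the experiment.

```python
def richardson_extrapolate(lambdas: list[float], values: list[float]) -> float:
    """Extrapolate measured expectation values to zero noise.

    Evaluates at lambda = 0 the unique polynomial of degree len(lambdas) - 1
    that passes through the points (lambdas[i], values[i]), using Lagrange form.
    """
    if len(lambdas) != len(values) or len(set(lambdas)) != len(lambdas):
        raise ValueError("need one value per distinct noise scale factor")
    estimate = 0.0
    for i, (li, yi) in enumerate(zip(lambdas, values)):
        weight = 1.0
        for j, lj in enumerate(lambdas):
            if j != i:
                weight *= (0.0 - lj) / (li - lj)  # Lagrange basis evaluated at 0
        estimate += weight * yi
    return estimate


# Illustrative (made-up) data: the measured magnetization shrinks as noise grows.
scales = [1.0, 1.5, 2.0]
measured = [0.80, 0.70, 0.60]
print(richardson_extrapolate(scales, measured))  # approximately 1.0
```

Adding more points makes the extrapolation more sensitive to statistical noise in each measurement, so in practice the fitting model and the scale factors have to be chosen with care.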


The team’s quantum calculations showed a clear advantage over conventional computers, but this advantage is not simply a matter of computational speed. Instead, it comes from the ability of the 127-qubit processor to encode a large number of configurations of the Ising model – something that conventional computers would not have enough memory to achieve.
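A back-of-the-envelope calculation shows why memory is the bottleneck for a brute-force classical simulation. A full state vector for n qubits has 2^n complex amplitudes; the sketch below assumes each is stored as a double-precision complex number of 16 bytes. This is an upper bound: classical methods such as tensor networks avoid storing every amplitude, so the figure illustrates the scale of the problem rather than the limit of every classical approach.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (30, 50, 127):
    print(f"{n:>3} qubits: {statevector_bytes(n):.3e} bytes")
```

Under these assumptions, 30 qubits already need 16 GiB, while 127 qubits would need 2^131 bytes, roughly 2.7 × 10^39.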

IBM’s Kristan Temme, a co-author of the Nature paper describing the work, believes that the research is a decisive step towards implementing more general near-term quantum algorithms before fault-tolerant quantum computers become available. He says that the team has shown that it is possible to obtain accurate expectation values of the model system from circuits that are limited only by the coherence time of the hardware. He calls their method for quantum error mitigation “the essential ingredient” for such applications in the near future. “We are very eager to put this new tool to use and to explore which of the many proposed near-term quantum algorithms will be able to provide an advantage over current classical methods in practice”, he tells Physics World.

John Preskill at the California Institute of Technology in the US, who was not involved in this research, says that he is “impressed†by the quality of the device performance, which he thinks is the team’s most important achievement. He adds that the results strengthen the evidence that near-term quantum computers can be used as instruments for physics exploration and discovery.